Graph Transformers (GTs) have shown promising potential in graph representation learning. However, GTs have computational cost that is quadratic in the number of nodes, lack inductive biases on graph structures, and rely on complex Positional/Structural Encodings (PE/SE). Mamba's strong performance in language modeling, where it outperforms Transformers of the same size and matches Transformers twice its size, has motivated several recent studies to adapt its architecture to other data modalities. Mamba, however, is designed specifically for sequence data, and the complex non-causal nature of graphs makes applying it directly to graphs challenging.

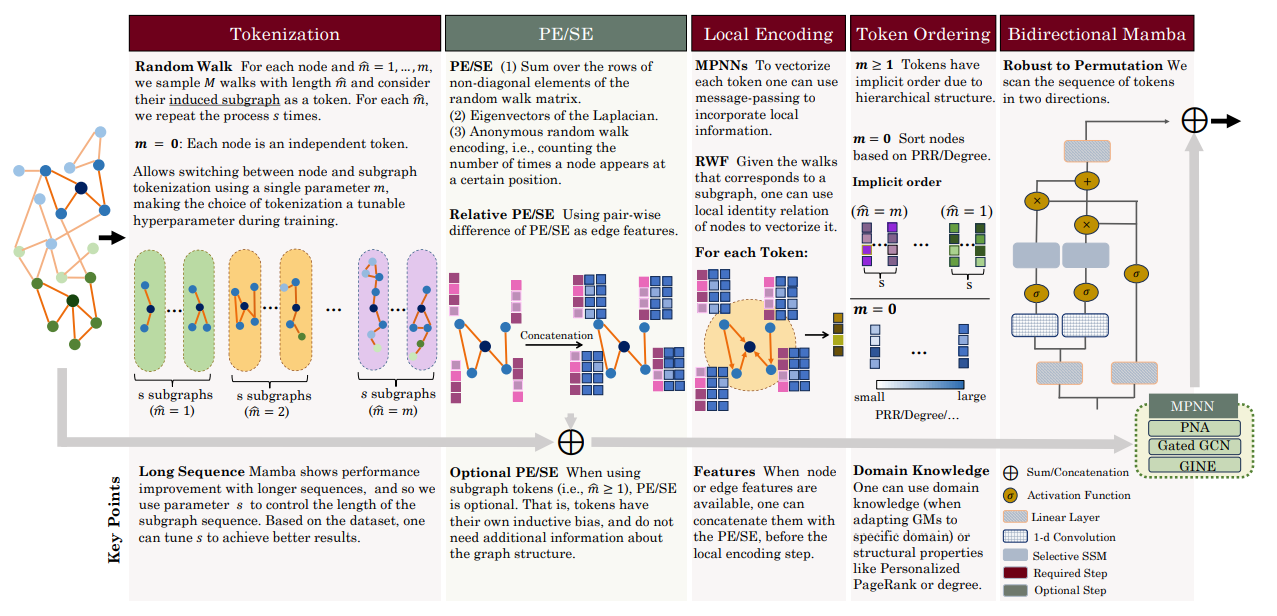

To address the limitations mentioned above, the paper presents Graph Mamba Networks (GMNs), a new class of graph learning models based on state space models. The recipe for Graph Mamba Networks is simple:

1. Tokenization: the graph is mapped into a sequence of tokens (m ≥ 1: subgraph tokenization; m = 0: node tokenization).
2. PE/SE (optional): inductive bias is added to the architecture using information about the position of nodes and the structure of the graph.
3. Local Encoding: local structures around each node are encoded using a subgraph vectorization mechanism.
4. Token Ordering: the sequence of tokens is ordered based on the context. Subgraph tokenization (m ≥ 1) has an implicit order and does not need this step.
5. (Stack of) Bidirectional Mamba: it scans the sequence and selects the relevant nodes or subgraphs to flow into the hidden states.
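To make the flow of the recipe concrete, here is a minimal toy sketch in plain Python. It is not the authors' implementation and it skips the optional PE/SE step. Several pieces are assumptions made purely for illustration: node tokenization (m = 0) is used, the local encoding is a hypothetical two-number feature (degree and mean neighbor degree), tokens are ordered by degree, and the Mamba block is replaced by a fixed-decay linear recurrence that has no input-dependent selection. All function names are hypothetical.

```python
from typing import Dict, List, Tuple

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbors


def tokenize_nodes(graph: Graph) -> List[int]:
    """Step 1 (m = 0): every node becomes one token."""
    return sorted(graph)


def local_encoding(graph: Graph, v: int) -> List[float]:
    """Step 3 (assumed stand-in): encode the neighborhood of v as
    [degree, mean neighbor degree]. The paper uses a learned
    subgraph vectorization instead."""
    nbrs = graph[v]
    degree = float(len(nbrs))
    mean_nbr = sum(len(graph[u]) for u in nbrs) / len(nbrs) if nbrs else 0.0
    return [degree, mean_nbr]


def order_tokens(graph: Graph, tokens: List[int]) -> List[int]:
    """Step 4 (assumed heuristic): sort by degree, ties broken by node id."""
    return sorted(tokens, key=lambda v: (len(graph[v]), v))


def linear_scan(xs: List[List[float]], decay: float) -> List[List[float]]:
    """Toy recurrence h_t = decay * h_{t-1} + x_t.
    A real Mamba block makes the recurrence input-dependent (selective)."""
    h = [0.0] * len(xs[0])
    out = []
    for x in xs:
        h = [decay * hi + xi for hi, xi in zip(h, x)]
        out.append(h)
    return out


def bidirectional_scan(xs: List[List[float]], decay: float = 0.5) -> List[List[float]]:
    """Step 5 (toy version): scan the sequence in both directions and sum,
    so each token sees tokens on both sides of it."""
    fwd = linear_scan(xs, decay)
    bwd = linear_scan(xs[::-1], decay)[::-1]
    return [[f + b for f, b in zip(fr, br)] for fr, br in zip(fwd, bwd)]


def gmn_sketch(graph: Graph) -> Tuple[List[int], List[List[float]]]:
    """Run steps 1, 3, 4, 5 and return (token order, per-token outputs)."""
    tokens = order_tokens(graph, tokenize_nodes(graph))
    features = [local_encoding(graph, v) for v in tokens]
    return tokens, bidirectional_scan(features)
```

For example, `gmn_sketch({0: [1], 1: [0, 2, 3], 2: [1], 3: [1]})` returns the degree-sorted token order together with one output vector per token. The backward pass is what lets a sequence model designed for causal data handle the non-causal structure of a graph, since every token receives information from both earlier and later tokens in the ordering.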

Experimental evaluations demonstrate that GMNs attain outstanding performance on long-range, small-scale, large-scale, and heterophilic benchmark datasets while consuming less GPU memory. These results show that while Transformers, complex message-passing, and PE/SE are each sufficient for good performance in practice, none of them is necessary.

Paper: https://arxiv.org/pdf/2402.08678.pdf

Code and models will be available soon (Feb 20).